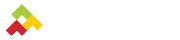

Single prompts often struggle with complex tasks. They try to make AI models do everything at once – understand requirements, generate results, and validate outputs. This approach is unreliable, especially for multi-step challenges. Flow engineering, which breaks tasks into smaller, validated steps, delivers better results.

- Single prompts: Quick but unreliable for complex tasks; prone to errors.

- Flow engineering: Multi-step workflows with validation at every stage; more accurate and easier to maintain.

- Key data: A 2024 study (AlphaCodium) showed GPT-4’s accuracy on the CodeContests coding benchmark jumped from 19% (single prompt) to 44% using a multi-step flow.

Bottom line: For simple tasks like summarizing text, single prompts work fine. For high-stakes, multi-step operations like code generation or financial modeling, flow engineering is the smarter choice.

What is Prompt Engineering and Where It Falls Short

Prompt Engineering Basics

Prompt engineering involves crafting precise input instructions to steer AI models toward desired outputs. The process often includes providing examples and clear guidance directly within the prompt, a method known as in-context learning. Think of it as writing a set of instructions detailed enough to get the AI to deliver exactly what you’re looking for.

Some widely used techniques include few-shot learning, where you show the model examples of the outcome you want, and chain-of-thought prompting, which encourages the model to explain its reasoning step by step. These methods work well for straightforward tasks like drafting emails, summarizing text, or generating basic code. The appeal? You don’t need to train the model – just refine the instructions.
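To make the two techniques concrete, here is a minimal Python sketch of how a few-shot prompt might be assembled, with an optional chain-of-thought instruction. The helper name `build_few_shot_prompt`, the `Input:`/`Output:` layout, and the sample reviews are illustrative assumptions; the resulting string would be sent to whatever model API you use.

```python
from typing import List, Tuple


def build_few_shot_prompt(
    instruction: str,
    examples: List[Tuple[str, str]],
    query: str,
    chain_of_thought: bool = False,
) -> str:
    """Assemble an instruction, worked examples, and a new input into one prompt."""
    parts = [instruction.strip(), ""]
    for example_input, example_output in examples:
        # Few-shot learning: show the model the pattern it should follow.
        parts += [f"Input: {example_input}", f"Output: {example_output}", ""]
    parts.append(f"Input: {query}")
    if chain_of_thought:
        # Chain-of-thought prompting: ask for reasoning before the final answer.
        parts.append("Think through the problem step by step, then give the output.")
    parts.append("Output:")
    return "\n".join(parts)


prompt = build_few_shot_prompt(
    "Classify the sentiment of each review as positive or negative.",
    [("Great battery life.", "positive"), ("Screen cracked in a week.", "negative")],
    "Setup took five minutes and it just works.",
)
print(prompt)
```

Everything lives in one string, which is exactly why this approach is quick to iterate on and also why it has no built-in way to check the answer.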

Why Single Prompts Struggle with Complex Tasks

While prompt engineering can handle simple tasks effectively, it often falters when faced with more complex challenges. The issue lies in how large language models (LLMs) process information. They excel at System 1 thinking – quick, intuitive pattern recognition – but struggle with System 2 thinking, which requires deliberate planning, exploring alternatives, and revising decisions. Single prompts simply aren’t equipped to support this kind of structured reasoning.

"LLMs excel at pattern-matching (System 1 thinking) but struggle with structured reasoning (System 2 thinking). This fundamental limitation makes single prompts unreliable for multi-step problems." – Rohan Balkondekar, Lyzr

Here’s the math: even if an AI achieves 90% accuracy on individual steps, chaining five steps together results in less than 60% overall reliability. And since current function-calling accuracy is still below 90%, relying on single-prompt solutions becomes impractical for production-grade software. This is why companies like Lindy.ai and Rexera abandoned single-prompt strategies in January 2025. They pivoted to structured, multi-step workflows, which significantly boosted reliability.
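The compounding is easy to check: if each step fails independently, end-to-end reliability is the per-step accuracy raised to the number of steps, so 0.9 to the fifth power is about 0.59. A short Python sketch (the function name is our own):

```python
def chained_reliability(step_accuracy: float, steps: int) -> float:
    """Probability that every step succeeds, assuming independent failures."""
    return step_accuracy ** steps


for n in (1, 3, 5, 10):
    print(f"{n:>2} steps at 90% each -> {chained_reliability(0.9, n):.1%} end to end")
```

Running it prints 90.0%, 72.9%, 59.0%, and 34.9% for 1, 3, 5, and 10 steps. Real steps are rarely fully independent, so treat this as a rough model rather than a prediction.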

Some developers attempt to overcome these limitations by creating extremely long prompts – sometimes exceeding 2,000 tokens – to cover every possible scenario. However, these prompts often become brittle, difficult to maintain, and nearly impossible to scale.

These challenges highlight the need for a more systematic approach, which leads us to the concept of flow engineering – a topic we’ll dive into next.

Flow Engineering: Multi-Step Workflows for Better Results

Flow Engineering Defined

Flow engineering is all about designing workflows where AI models handle complex tasks in multiple stages, rather than trying to nail everything in a single attempt. By breaking tasks into smaller, more manageable steps – each with its own validation, feedback, and error-checking – this method simplifies debugging and makes maintenance easier.

This approach tackles the shortcomings of single-prompt strategies by focusing on reliability. It shifts AI processes from System 1 thinking (quick, automatic decisions at the token level) to System 2 thinking (thoughtful planning and exploring alternatives). Flow engineering also builds progressive context, starting with simpler tasks like self-reflection before moving on to more challenging ones, such as generating complex code. This ensures the model has enough context to deliver better results.

How Flow Engineering Operates

Flow engineering relies on structured, step-by-step processes to improve reliability. Here’s how it works: the AI generates an initial output, tests it in real-world conditions, receives feedback (like error messages or test results), and then refines its output. This iterative cycle continues until the desired quality is achieved.
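The generate-test-refine cycle can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions: `generate` stands in for an LLM call that receives the previous round's failure messages, tests are simple (input, expected output) pairs, and `fake_generate` is a hypothetical stub that returns a buggy function first and a corrected one once it sees feedback.

```python
from typing import Callable, List, Optional, Tuple

IOPair = Tuple[int, int]  # (input, expected output)
Candidate = Callable[[int], int]


def run_tests(candidate: Candidate, tests: List[IOPair]) -> List[str]:
    """Run every test and return failure messages; an empty list means all passed."""
    failures = []
    for arg, expected in tests:
        try:
            actual = candidate(arg)
        except Exception as exc:  # runtime errors become feedback as well
            failures.append(f"input {arg}: raised {exc!r}")
            continue
        if actual != expected:
            failures.append(f"input {arg}: expected {expected}, got {actual}")
    return failures


def refine_until_passing(
    generate: Callable[[List[str]], Candidate],
    tests: List[IOPair],
    max_iterations: int = 5,
) -> Optional[Candidate]:
    """Generate, test, feed failures back, and retry until tests pass or the budget runs out."""
    feedback: List[str] = []
    for _ in range(max_iterations):
        candidate = generate(feedback)
        feedback = run_tests(candidate, tests)
        if not feedback:
            return candidate
    return None


def fake_generate(feedback: List[str]) -> Candidate:
    """Hypothetical stand-in for an LLM: buggy on the first try, fixed once it sees errors."""
    if not feedback:
        return lambda n: n * n + 1
    return lambda n: n * n


solution = refine_until_passing(fake_generate, [(2, 4), (3, 9)])
print(solution(5) if solution else "no passing candidate")
```

The iteration cap matters in practice: without it, a model that never converges would loop (and bill) forever.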

Take, for example, the work of researchers Tal Ridnik, Dedy Kredo, and Itamar Friedman in January 2024. They developed AlphaCodium, a multi-stage iterative system for generating code. By integrating problem reflection and a "test-based, code-oriented" workflow, they boosted GPT-4’s accuracy (pass@5) on the CodeContests validation set from 19% to 44%. Their results showed that iterative workflows can substantially outperform single-prompt methods, especially for demanding programming tasks.

A key feature of this method is the use of test anchors: tests the code has already passed, which lock in behavior that works. Each later iteration must keep passing these anchors, which prevents regressions. To further improve reliability, the system uses double validation – asking the AI to regenerate outputs to catch errors – rather than relying on simple yes/no checks.
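Test anchors can be sketched as an acceptance rule: a proposed fix is kept only if it passes the new test and every anchor. The Python below is a simplified illustration, not AlphaCodium's code; `propose_fix` stands in for an LLM repair call, and the absolute-value example and all function names are hypothetical.

```python
from typing import Callable, List, Tuple

IOCheck = Tuple[int, int]  # (input, expected output)
Solution = Callable[[int], int]


def passes(solution: Solution, check: IOCheck) -> bool:
    """True if the solution returns the expected output without raising."""
    arg, expected = check
    try:
        return solution(arg) == expected
    except Exception:
        return False


def iterate_with_anchors(
    solution: Solution,
    public_tests: List[IOCheck],
    generated_tests: List[IOCheck],
    propose_fix: Callable[[Solution, IOCheck], Solution],
) -> Tuple[Solution, List[IOCheck]]:
    """Work through new tests, accepting a fix only if every anchor still passes."""
    # Assumes the starting solution already passes the public tests, which seed the anchors.
    anchors = list(public_tests)
    for check in generated_tests:
        if passes(solution, check):
            anchors.append(check)  # a passing test becomes a new anchor
            continue
        candidate = propose_fix(solution, check)
        # Keep the fix only if it solves this test without breaking any anchor.
        if passes(candidate, check) and all(passes(candidate, a) for a in anchors):
            solution = candidate
            anchors.append(check)
    return solution, anchors


def start(n: int) -> int:
    return n  # passes the public tests but fails on negative inputs


def regressing_fix(solution: Solution, check: IOCheck) -> Solution:
    return lambda n: -n  # fixes -3 -> 3 but breaks the anchor 1 -> 1


public = [(1, 1), (2, 2)]
generated = [(-3, 3), (0, 0)]
good, good_anchors = iterate_with_anchors(start, public, generated, lambda s, c: abs)
bad, bad_anchors = iterate_with_anchors(start, public, generated, regressing_fix)
```

The `abs` fix is accepted, while `regressing_fix` is rejected because it fails the anchor `(1, 1)`, so the original solution is kept.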

"Flow Engineering elevates prompt engineering, by breaking tasks into smaller steps and prompting the LLM to self-refine its answers, leading to enhanced accuracy and better performance." – Rohan Balkondekar, Lyzr

In February 2024, Lyzr applied flow engineering through the Language Agent Tree Search (LATS) framework to streamline complex enterprise workflows. By combining steps like selection, expansion, evaluation, and simulation, LATS achieved a 94.4% success rate on the HumanEval benchmark, a significant leap from GPT-4’s baseline of 67.0% and an improvement of 27.4 percentage points.
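LATS adapts Monte Carlo tree search to language agents, and its selection step uses the UCT (Upper Confidence bound applied to Trees) score to balance exploiting promising branches against exploring rarely tried ones. The Python sketch below covers only that selection step; the `Node` fields and the exploration weight are assumptions for illustration, and expansion, evaluation, simulation, and backpropagation are left out.

```python
import math
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Node:
    """One node in the search tree, e.g. a partial solution plus its feedback."""
    value_sum: float = 0.0  # total of evaluation scores backpropagated into this node
    visits: int = 0
    children: List["Node"] = field(default_factory=list)

    @property
    def mean_value(self) -> float:
        return self.value_sum / self.visits if self.visits else 0.0


def uct_score(child: Node, parent_visits: int, exploration: float = 1.0) -> float:
    """UCT: average value (exploitation) plus a bonus for rarely visited nodes (exploration)."""
    if child.visits == 0:
        return math.inf  # try every child at least once
    return child.mean_value + exploration * math.sqrt(math.log(parent_visits) / child.visits)


def select_child(parent: Node, exploration: float = 1.0) -> Optional[Node]:
    """Selection step: descend to the child with the highest UCT score."""
    if not parent.children:
        return None
    return max(parent.children, key=lambda c: uct_score(c, parent.visits, exploration))


well_explored = Node(value_sum=6.0, visits=8)  # mean value 0.75
barely_tried = Node(value_sum=1.0, visits=2)   # mean value 0.50
root = Node(visits=10, children=[well_explored, barely_tried])
```

With the default weight of 1.0, the barely tried branch wins (score about 1.57 versus 1.29) because its exploration bonus is larger; with a weight of 0.1, the higher-value branch wins instead.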

Prompt Engineering vs Flow Engineering: Side-by-Side Comparison

Prompt Engineering vs Flow Engineering: Key Differences and Performance Metrics

Key Differences at a Glance

Expanding on the advantages of effective workflow design, let’s compare prompt engineering and flow engineering directly. The performance gap between these approaches is measurable. Prompt engineering relies on treating the large language model (LLM) as a single decision-maker, while flow engineering coordinates multiple steps with built-in quality assurance at each stage.

The distinction in reliability becomes clear during practical application. Single prompts depend on "System 1" thinking – quick, automatic, token-based decisions that work for straightforward tasks but falter with complexity. On the other hand, flow engineering enables "System 2" thinking, involving deliberate planning, exploring alternatives, and revisiting steps when necessary.

Here’s a breakdown of their differences across key aspects:

| Feature | Prompt Engineering | Flow Engineering |
|---|---|---|
| Interaction Model | Single-turn / Direct | Multi-stage / Iterative |
| Reliability | "Prompt-and-Pray" (Low) | Structured / Deterministic (High) |
| Complexity Handling | Monolithic (struggles with edge cases) | Modular (breaks tasks down) |
| Error Correction | Manual iteration | Automated self-refinement/testing |
| Scalability | Difficult to maintain/debug | Easier to version and test |

This comparison highlights why flow engineering is better suited for creating robust, production-ready systems.

A key advantage of flow engineering is its modularity. Single prompts often evolve into unwieldy "God Prompts" – massive, 2,000-token configurations that try to do everything but end up failing at even simple tasks. Flow engineering avoids this by ensuring each step focuses on a specific task, making it easier to pinpoint and resolve errors without disrupting the entire system.

"The prompt-and-pray model – where business logic lives entirely in prompts – creates systems that are unreliable, inefficient, and impossible to maintain at scale." – Hugo Bowne-Anderson and Alan Nichol

Using single prompts in a chained process significantly raises the likelihood of errors. Flow engineering mitigates this by incorporating validation at every stage, stopping mistakes before they ripple through the workflow.
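Validation at every stage can be sketched as a pipeline of (name, step, validator) triples that halts at the first failure, so a bad value never reaches later steps. This is a minimal Python illustration; the invoice-parsing stages are hypothetical stand-ins for LLM or tool calls.

```python
from typing import Any, Callable, List, Tuple

Stage = Tuple[str, Callable[[Any], Any], Callable[[Any], bool]]  # (name, step, validator)


class StageError(Exception):
    """Raised when a stage fails or its output is invalid; later stages never run."""


def run_pipeline(stages: List[Stage], data: Any) -> Any:
    for name, step, is_valid in stages:
        try:
            data = step(data)
        except Exception as exc:
            raise StageError(f"stage '{name}' failed: {exc}") from exc
        if not is_valid(data):
            raise StageError(f"stage '{name}' produced invalid output: {data!r}")
    return data


# Hypothetical stages; in practice "extract" might be an LLM call and the rest plain code.
stages: List[Stage] = [
    ("extract", lambda text: text.split("total:")[-1].strip(), lambda s: s != ""),
    ("parse", lambda s: float(s.replace("$", "").replace(",", "")), lambda x: x >= 0),
    ("add_tax", lambda x: round(x * 1.08, 2), lambda x: x >= 0),
]

print(run_pipeline(stages, "Invoice 42, total: $1,250.00"))
```

Because the error names the failing stage, debugging starts at the right step instead of at the end of a long prompt.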


AlphaCodium Case Study and Business Results

AlphaCodium Research: From 19% to 44% Accuracy

In January 2024, a team of researchers led by Tal Ridnik published the AlphaCodium study, showcasing the measurable benefits of flow engineering. Their work tested GPT-4 on the CodeContests benchmark – a dataset designed with private input–output tests to prevent false positives.

Here’s the standout result: GPT-4 achieved 19% accuracy under single-prompt conditions. But when AlphaCodium’s multi-stage flow was applied, accuracy jumped to 44% – more than doubling its performance without changing the AI model itself.

The AlphaCodium flow divides code generation into clear, structured stages:

- Problem Reflection: Breaking down goals, inputs, outputs, rules, and constraints into bullet points.

- Public Test Reasoning: Explaining why specific test inputs lead to their outputs.

- Solution Generation and Ranking: Developing 2–3 possible approaches in natural language and selecting the strongest one.

- AI-Generated Test Enrichment: Adding 6–8 diverse edge cases to identify potential blind spots.

- Iterative Code Refinement with Test Anchors: Refining the code step by step, using previously passed tests as anchors to prevent regression.
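The staged structure above can be sketched as an ordered list of prompts in which every stage sees the outputs of the earlier ones, building context progressively. This Python sketch paraphrases the stage instructions and does not reproduce AlphaCodium's actual prompts; `call_llm` is a hypothetical callable wrapping whatever model API you use, and anchor-based code refinement would run after the last stage.

```python
from typing import Callable, Dict, List, Tuple

# Paraphrased stage instructions (hypothetical; not AlphaCodium's actual prompts).
STAGES: List[Tuple[str, str]] = [
    ("reflection", "Restate the goal, inputs, outputs, rules, and constraints as bullet points."),
    ("test_reasoning", "Explain why each public test input produces its output."),
    ("solutions", "Propose 2-3 approaches in plain language and choose the strongest."),
    ("extra_tests", "Write 6-8 additional edge-case tests."),
    ("initial_code", "Write code for the chosen approach."),
]


def run_flow(problem: str, call_llm: Callable[[str], str]) -> Dict[str, str]:
    """Run the stages in order; each prompt includes every earlier stage's output."""
    outputs: Dict[str, str] = {}
    for name, instruction in STAGES:
        context = "\n\n".join(f"## {stage}\n{text}" for stage, text in outputs.items())
        prompt = f"Problem:\n{problem}\n\n{context}\n\nTask: {instruction}"
        outputs[name] = call_llm(prompt)
    return outputs


# Usage with a stub model that records prompts instead of calling an API.
seen_prompts: List[str] = []


def fake_llm(prompt: str) -> str:
    seen_prompts.append(prompt)
    return f"output {len(seen_prompts)}"


result = run_flow("Return the sum of two integers.", fake_llm)
```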

"AlphaCodium – a test-based, multi-stage, code-oriented iterative flow… consistently and significantly improves results." – Tal Ridnik, Lead Author

This method doesn’t just improve accuracy; it also reduces operational costs significantly. AlphaCodium achieved results comparable to DeepMind’s AlphaCode while requiring far fewer LLM calls: around 100 compared to 1,000,000, roughly four orders of magnitude fewer. That reduction in API calls means lower costs and faster execution times, making the approach practical for production environments. The same design principles carry over to business workflows, including demo-to-close processes.

Business Impact: Demo-to-Close Rates and Revenue Operations

The technical advancements of multi-step flows have translated into clear business benefits. By shifting from single prompts to multi-step flows, companies are producing outputs that are more reliable and ready for production, cutting down on rework and boosting efficiency. These improvements directly affect conversion rates and operational costs.

For example, Lyzr reported a significant win for one of their clients, who saw their demo-to-close rate soar from 15% to over 40% after replacing single-prompt AI interactions with multi-step, automated follow-up workflows. This project used Lyzr’s DataAnalyzr and Automata frameworks to design modular, agent-based workflows. At critical points, human-in-the-loop supervision ensured follow-ups were timely, relevant, and personalized – delivering information that resonated with prospects.

This isn’t an isolated success. Across industries, 70% of B2B deals fail within 48 hours after a demo due to issues like missed follow-ups, generic outreach, or slow response times. Flow engineering addresses these problems by embedding validation steps, error handling, and feedback loops into workflows, ensuring nothing slips through the cracks.
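A follow-up workflow with validation and a human gate might look like the Python sketch below. Everything here is a hypothetical illustration, not Lyzr's implementation: the `Prospect` fields, the personalization checks, and the routing labels are assumptions, and the 48-hour window is taken from the figure cited above.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List

FOLLOW_UP_WINDOW = timedelta(hours=48)


@dataclass
class Prospect:
    name: str
    company: str
    demo_at: datetime


def check_draft(draft: str, prospect: Prospect) -> List[str]:
    """Deterministic checks on an AI-drafted follow-up; returns the problems found."""
    problems = []
    if prospect.name not in draft or prospect.company not in draft:
        problems.append("generic: missing the prospect's name or company")
    if "{" in draft or "}" in draft:
        problems.append("unfilled template placeholder")
    return problems


def route_follow_up(draft: str, prospect: Prospect, now: datetime) -> str:
    """Send, hold for human review, or escalate if the follow-up window was missed."""
    if now - prospect.demo_at > FOLLOW_UP_WINDOW:
        return "escalate"
    if check_draft(draft, prospect):
        return "human_review"  # human-in-the-loop gate for drafts that fail checks
    return "send"


dana = Prospect("Dana", "Acme", demo_at=datetime(2025, 3, 3, 10, 0))
print(route_follow_up("Hi Dana, thanks for walking through Acme's workflow today.",
                      dana, now=datetime(2025, 3, 3, 15, 0)))
```

The model only drafts the message; timing and personalization rules are enforced by plain code that can be tested.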

The financial case for flow engineering becomes even more compelling when you factor in the cost of rework. While single-prompt approaches might seem cheaper upfront, the need for manual corrections or complete regeneration of outputs quickly adds up. Companies adopting flow engineering report 2–3× improvements in output reliability for complex tasks. This means fewer support tickets, faster time-to-value, and happier customers – all of which contribute to stronger business performance.
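The rework argument can be made concrete with a simple expected-cost formula: API spend plus failure rate times the cost of fixing a failed output. The numbers in this Python sketch are illustrative placeholders, not measurements; substitute your own call prices, failure rates, and rework costs.

```python
def expected_cost_per_task(calls: int, cost_per_call: float,
                           failure_rate: float, rework_cost: float) -> float:
    """API spend plus the expected cost of manually fixing failed outputs."""
    return calls * cost_per_call + failure_rate * rework_cost


# Placeholder assumptions, not measured data.
single_prompt = expected_cost_per_task(calls=1, cost_per_call=0.01,
                                       failure_rate=0.40, rework_cost=15.0)
flow = expected_cost_per_task(calls=8, cost_per_call=0.01,
                              failure_rate=0.10, rework_cost=15.0)
print(f"single prompt: ${single_prompt:.2f} per task, flow: ${flow:.2f} per task")
```

With these placeholder figures the flow spends more on API calls ($0.08 versus $0.01) but costs about $1.58 per task in total versus $6.01, because expected rework drops. A flow pays off only when the reduction in failure rate times the rework cost exceeds the extra call cost.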

When to Use Each Approach

Choosing between prompt engineering and flow engineering comes down to the complexity of the task and the level of reliability required.

Prompt Engineering for Quick, Simple Tasks

Prompt engineering works best for straightforward, single-step tasks like drafting content, answering FAQs, summarizing basic information, or extracting simple data. It’s fast, cost-effective, and avoids the need for complex setups. Plus, it allows for quick iterations without much overhead.

For example, if you’re brainstorming blog post ideas, crafting social media captions, or pulling general insights from customer feedback, a well-designed prompt can give you a solid starting point. These outputs often need just a little human refinement, making this approach perfect when speed is a priority over absolute precision.

That said, when a task demands high accuracy, a more robust method is necessary.

Flow Engineering for Multi-Step, Production-Ready Work

On the flip side, flow engineering is the go-to choice for high-stakes, multi-step tasks where precision is non-negotiable. Think of code generation, database queries, financial modeling, or customer-facing automations – these require structured workflows with built-in validation at every step. In such cases, even a small mistake can have serious consequences, making the additional cost of iterative API calls worthwhile.

Flow engineering ensures a higher level of reliability by breaking down tasks into manageable steps, validating outputs, and addressing edge cases. For example, if your business depends on timely, personalized customer follow-ups to close deals, you can’t rely on a “set-it-and-forget-it” prompt. This method separates conversational AI from the deterministic processes that require strict accuracy, providing the control and precision needed for production environments.

If you find yourself frequently correcting outputs manually, it’s a sign to shift to flow-based workflows. These systems are designed to handle errors, validate results, and ensure smooth execution without constant human intervention.
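One way to act on this signal is to log which outputs needed manual correction and flag task types whose correction rate stays high. The threshold, minimum sample size, and task names in this Python sketch are hypothetical and should be tuned to your own tolerance for errors.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def tasks_to_convert(log: Iterable[Tuple[str, bool]], threshold: float = 0.2,
                     min_samples: int = 20) -> List[str]:
    """Flag task types whose share of manually corrected outputs exceeds `threshold`."""
    totals: Dict[str, int] = defaultdict(int)
    corrected: Dict[str, int] = defaultdict(int)
    for task_type, was_corrected in log:
        totals[task_type] += 1
        corrected[task_type] += int(was_corrected)
    rate = {t: corrected[t] / n for t, n in totals.items()}
    # Ignore task types with too few samples to judge; list the worst offenders first.
    flagged = [t for t, n in totals.items() if n >= min_samples and rate[t] > threshold]
    return sorted(flagged, key=lambda t: rate[t], reverse=True)


# Hypothetical log of (task type, was the output manually corrected?).
log = ([("faq_answer", i < 2) for i in range(30)]
       + [("sql_query", i < 10) for i in range(25)]
       + [("pricing_quote", i < 4) for i in range(5)])
print(tasks_to_convert(log))
```

Here only `sql_query` is flagged (40% corrected); `pricing_quote` has a higher rate but too few samples to judge under the default `min_samples`.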

Moving Beyond Single Prompts to Workflow Design

Many companies spend weeks fine-tuning prompts and tweaking settings, only to hit a wall when it comes to reliability. The real game-changer? Shifting focus from prompt optimization to system design.

"AI implementation is a system design challenge, not a prompt optimization problem." – M Studio

The companies seeing 2–3x improvements in output reliability aren’t just refining prompts – they’re rethinking the entire system. Instead of relying solely on prompts, they design workflows where the language model (LLM) focuses on interpreting intent, while structured, testable code handles critical functions like validation, error recovery, and logic execution. By treating AI like traditional software – complete with version control, testing, and fault tolerance – these businesses move beyond demo-level outputs to systems that are ready for real-world production.
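Here is a minimal Python sketch of that split, assuming the model is asked to return its interpretation of a customer request as JSON. The `action`/`order_id` schema, the allowed actions, and the refund policy are hypothetical; the point is that the model's output is validated before use and the business rule lives in ordinary, testable code.

```python
import json
from typing import Any, Dict

ALLOWED_ACTIONS = {"refund", "cancel"}  # hypothetical intents


def parse_intent(llm_output: str) -> Dict[str, Any]:
    """Validate the model's JSON before any business logic touches it."""
    data = json.loads(llm_output)  # json.JSONDecodeError (a ValueError) if malformed
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    if data.get("action") not in ALLOWED_ACTIONS:
        raise ValueError(f"unsupported action: {data.get('action')!r}")
    if not isinstance(data.get("order_id"), str):
        raise ValueError("order_id must be a string")
    return data


def refund_amount(order_total: float, days_since_purchase: int) -> float:
    """Hypothetical refund policy written as plain code, so it can be unit-tested and versioned."""
    if days_since_purchase <= 30:
        return order_total
    if days_since_purchase <= 60:
        return round(order_total * 0.5, 2)
    return 0.0


intent = parse_intent('{"action": "refund", "order_id": "A-1001"}')
print(intent["action"], refund_amount(80.0, days_since_purchase=45))
```

If the model returns malformed or unexpected JSON, the workflow raises a `ValueError` that can trigger a retry or human review instead of silently acting on bad input.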

Key Takeaways

Flow engineering improves reliability by emphasizing separation of concerns. Let the LLM handle language tasks while your workflows manage validation and error handling. It’s not about making the AI "smarter" – it’s about making your systems more dependable.

This approach isn’t just theory; it’s backed by data. AlphaCodium, for instance, reported significant accuracy gains using this method. Similarly, the StateFlow paradigm, which models a task as a state machine, achieved success rates 13% to 28% higher than the ReAct agent baseline on the InterCode SQL and ALFWorld benchmarks, while cutting costs by 3× to 5×. These results highlight the difference between systems that constantly need human intervention and those that can operate reliably in production.
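StateFlow frames a task as a state machine in which each state has a narrow job and transitions decide what happens next. The Python sketch below is loosely modeled on that idea for a SQL task; the state names, handlers, and the simulated typo in the first query are illustrative assumptions, not the StateFlow implementation.

```python
from enum import Enum, auto
from typing import Callable, Dict, List


class State(Enum):
    OBSERVE = auto()
    SOLVE = auto()
    VERIFY = auto()
    ERROR = auto()
    END = auto()


Handler = Callable[[dict], State]


def run_state_machine(handlers: Dict[State, Handler], max_steps: int = 20) -> List[State]:
    """Run handlers from OBSERVE until END (or the step budget); return the visited states."""
    ctx: dict = {}
    state = State.OBSERVE
    trace = [state]
    for _ in range(max_steps):
        if state is State.END:
            break
        state = handlers[state](ctx)  # each handler does one job and picks the next state
        trace.append(state)
    return trace


def observe(ctx: dict) -> State:
    ctx["schema"] = "orders(id, total)"  # e.g. inspect the database schema
    return State.SOLVE


def solve(ctx: dict) -> State:
    ctx["attempts"] = ctx.get("attempts", 0) + 1
    # Simulated model output: the first attempt has a table-name typo.
    table = "order" if ctx["attempts"] == 1 else "orders"
    ctx["query"] = f"SELECT SUM(total) FROM {table}"
    return State.VERIFY


def verify(ctx: dict) -> State:
    # Stand-in for executing the query and checking the result.
    return State.END if ctx["query"].endswith("FROM orders") else State.ERROR


def error(ctx: dict) -> State:
    # Remember the failed query so the next SOLVE attempt can take it into account.
    ctx.setdefault("failed_queries", []).append(ctx["query"])
    return State.SOLVE


trace = run_state_machine({State.OBSERVE: observe, State.SOLVE: solve,
                           State.VERIFY: verify, State.ERROR: error})
print([s.name for s in trace])
```

The trace shows the recovery path explicitly: OBSERVE, SOLVE, VERIFY, ERROR, SOLVE, VERIFY, END. Each transition can be logged and tested on its own.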

Steps to Build Reliable AI Workflows

If you’re spending too much time fixing AI outputs, it’s time to rethink your strategy with flow engineering. Start by identifying where your current prompts introduce risks or lead to high debugging costs. Focus on tasks where errors could have serious consequences – like customer communications, financial calculations, code generation, or data processing.

For businesses generating $1M+ in annual revenue and ready to adopt production-grade AI workflows, M Studio offers live sessions to design and deliver fully operational systems. Learn more about our approach to AI and GTM implementation to see if it aligns with your goals.

The shift from prompt engineering to flow engineering isn’t about sidelining LLMs. Instead, it’s about integrating them into carefully designed workflows that manage complexity and ensure reliability. This is how you turn AI into a dependable tool for your business.

FAQs

What makes flow engineering more effective than prompt engineering for complex tasks?

Flow engineering takes a thoughtful approach to designing multi-step workflows, incorporating validation, feedback loops, and error handling. Unlike single-prompt methods, this structured process breaks tasks into manageable steps, significantly boosting accuracy and reliability. For instance, one study showed that an iterative workflow raised GPT-4’s code-generation accuracy from 19% to 44%, thanks to consistent checks and refinements along the way.

But it’s not just about better accuracy – flow engineering also transforms AI into a more dependable tool for tackling complex problems. By enabling structured reasoning, it goes beyond simple pattern matching, turning AI into a powerful problem-solver. Businesses adopting this approach reap real rewards: fewer errors, quicker implementation, and more effective outcomes. Many companies find that flow-engineered AI pipelines streamline operations and improve success rates on critical tasks, making it an essential strategy for scaling AI with confidence.

How does flow engineering enhance AI performance for complex tasks?

Flow engineering enhances AI accuracy by implementing structured, multi-step processes like validation, iterative adjustments, and feedback loops. These steps enable large language models (LLMs) to evaluate, refine, and improve their outputs, effectively tackling the shortcomings of single-prompt methods.

This approach has demonstrated impressive results, including raising accuracy on complex coding tasks from 19% to 44%. By dividing tasks into smaller, more manageable steps, flow engineering not only ensures more dependable outputs but also minimizes errors and the need for rework, making results more production-ready.

When should I use flow engineering instead of prompt engineering?

When dealing with tasks that involve multiple steps, require high precision, or depend on structured reasoning, flow engineering is the way to go. While single prompts are fine for simple, straightforward tasks, more complex workflows – like multi-step code generation or data validation – demand a more iterative approach. This includes error handling and feedback loops to ensure dependable results.

Take code generation as an example. Research has shown that transitioning from single-prompt methods to flow engineering can more than double the accuracy of outputs. By using this approach, you can cut down on rework, produce higher-quality results, and deliver outputs that are ready for production. If your task involves conditional logic, rigorous testing, or has critical business implications, flow engineering offers a more reliable path to consistent success.
