On October 14th, during LA Tech Week, an unusual coalition converged at Esters Wine Bar in Santa Monica. M Studio co-hosted an evening with Escalon, Aperture VC, and JP Morgan, with support from AI 2030, bringing together 50 builders, investors, and innovators for a conversation that tech hubs rarely have:

What does responsible AI actually mean when you’re the one building or deploying it?

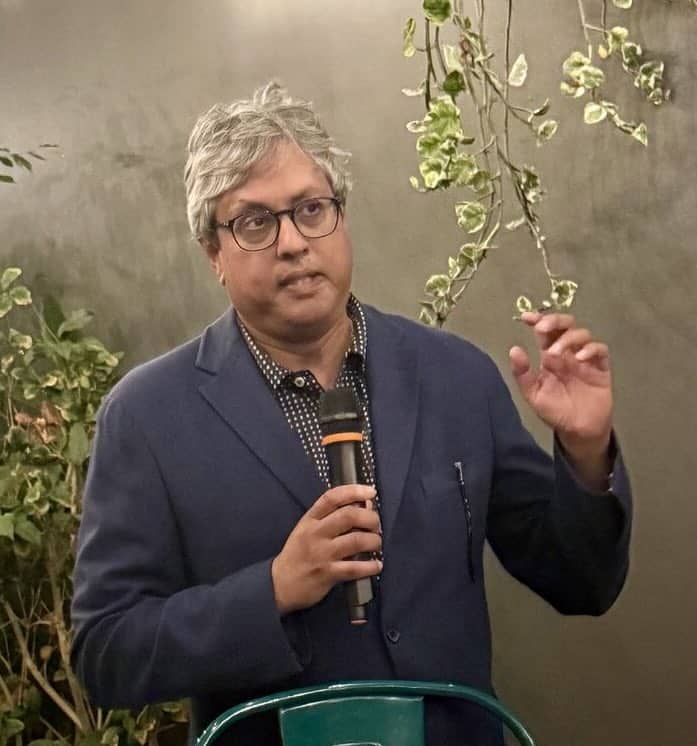

The centerpiece of the evening was a fireside chat between Alessandro Marianantoni, founder of M Studio, and Meghna Sinha—Chief AI Officer at Kai Roses and Distinguished Fellow at AI 2030. Meghna brings 26 years of AI implementation experience at Fortune 50 companies, including Verizon and Target, having delivered over $1 billion in measurable value. If anyone understands where AI initiatives succeed and fail, it’s her.

The Co-Hosts

This wasn’t just another tech event. The evening brought together an intentional mix of perspectives—each co-host representing a different dimension of how technology scales from idea to impact.

Escalon, as the evening’s primary sponsor, provides the operational backbone for growth companies—the financial infrastructure, accounting, HR, and back-office systems that enable ventures to scale from startup to enterprise. They work with companies navigating rapid growth and the operational complexity that comes with it.

Aperture VC brings the venture capital perspective, investing in fintech and insurtech companies while maintaining a focus on diverse founders and markets often overlooked by traditional Silicon Valley investors. Based in Laguna Beach, they understand both the innovation ecosystem and the practical realities of building sustainable businesses.

JP Morgan represents institutional scale and the perspective of enterprise deployment. When you’re working with systems that touch millions of customers and operate across global markets, implementation isn’t just about innovation—it’s about integration with existing infrastructure.

M Studio operates as a venture studio, working with early-stage founders through M Accelerator while providing strategic consulting and GTM engineering to established companies. We see the full spectrum: the excitement of innovation and the reality of implementation.

AI 2030, a nonprofit focused on AI literacy and ethical frameworks, supported the evening as part of its mission to mainstream responsible AI adoption globally.

Together, these perspectives created the context for a substantive conversation about building AI systems that actually work—not just technically, but sustainably.

The $400 Billion Question

Alessandro’s conversation with Meghna began with the fundamentals. When we talk about “responsible AI,” what does that actually mean in practice?

Meghna’s framework was clear: Responsible AI isn’t about compliance checkboxes or slowing down innovation. It’s about building systems that don’t accidentally destroy their own foundations.

She’s spent decades watching AI initiatives fail—not because the technology doesn’t work, but because something breaks between strategy and execution. The technology is rarely the problem. The problem is building without thinking through second-order effects.

This brought the conversation to the creator economy.

The Canary in the Coal Mine

“We’re pouring $400 billion into AI infrastructure this year,” Alessandro noted. “But these models were trained on creator content—often without compensation. What are we missing?”

Meghna’s answer reframed the entire conversation: The creator economy crisis isn’t isolated. It’s a preview.

When AI can generate content at scale without compensating the humans whose work trained it, we’re not just disrupting one industry. We’re establishing a pattern—a blueprint that will ripple across every sector. Healthcare. Logistics. Finance. Legal services.

As Meghna has written elsewhere, the creator economy is “the canary in the coal mine” for AI disruption. If we allow a system in which massive value is created by dispossessing the original creators to become established, that blueprint will shape the economies that follow.

The question isn’t whether AI will disrupt your industry. The question is whether you’re building systems that cannibalize their own value chains.

Pattern Recognition Across Industries

Alessandro pressed further: What patterns does she see across different sectors?

Meghna’s work spans entertainment, healthcare, and enterprise operations, and the pattern holds across all three. AI disrupts wherever workflows are linear, deterministic, and governed by rules humans created to manage complexity.

But here’s what most people miss: Those workflows aren’t naturally deterministic. We made them that way through governance structures designed for human-only operations. When you introduce AI agents into these systems, those linear workflows break.

The companies that will succeed aren’t just deploying AI—they’re rethinking governance for mixed human-AI workforces. They’re asking: What metrics make sense when machines have agency? What compensation structures work when value creation involves both human creativity and AI scale?

For the builders and investors in the room, Meghna’s advice was pointed: Look at your current AI projects. Are you automating existing workflows, or are you rethinking the underlying structure? One approach optimizes the present; the other builds for sustainability.

What Responsible Actually Means

The conversation’s most provocative moment came when Alessandro asked about the biggest misconception people have about responsible AI.

“People think it slows you down,” Meghna said. “But responsible AI is actually your competitive advantage. It’s how you avoid building products nobody wants, or worse—products that work brilliantly until they don’t, because you built them on unsustainable foundations.”

She pointed to her Fortune 50 experience: The companies that delivered real value from AI weren’t the ones that moved fastest. They were the ones that took time to understand where the technology actually fit, who it served, and what happened to the workflows—and people—it touched.

Responsible AI isn’t about ethics as constraint. It’s about not wasting resources building the wrong thing.

The Cross-Sector Insight

What made this evening valuable wasn’t just Meghna’s expertise. It was having venture capital, enterprise operations, institutional finance, startup acceleration, and AI ethics represented in the same room.

One Thing to Check This Week

Alessandro closed with a practical question: For everyone building or deploying AI, what’s the one thing they should check on their current projects?

Meghna’s answer: Ask where your training data came from, and what happens to the people or systems that created it.

Not because it’s a compliance requirement. Because if you’re building value by extracting it from somewhere else without replenishment, you’re building on quicksand. And $400 billion in infrastructure investment won’t save a foundation that collapses under its own weight.

Building LA’s Responsible AI Community

This conversation marked AI 2030’s first engagement with the LA community—a significant moment for bringing responsible AI frameworks to one of the world’s major tech hubs.

The energy in the room suggested this is exactly the kind of conversation LA’s tech ecosystem needs. Not hype. Not pitch fests. Practitioners from different sectors talking about what actually works and what actually matters.

The $400 billion infrastructure investment is happening regardless. The question is whether we build it on sustainable foundations—with transparent data sourcing, equitable compensation structures, and governance designed for human-AI collaboration, not human replacement.

The Future of Responsible AI was co-hosted by M Studio, Escalon, Aperture VC, and JP Morgan, with support from AI 2030. Special thanks to Randall Hall (Escalon), Garnet Heraman (Aperture VC), Martin Garcia (JP Morgan), and Xiaochen Zhang (AI 2030) for making this conversation possible, and to the 50 participants who showed up for substance over spectacle.